Face analysis software comparison: Affectiva (AFFDEX) vs OpenFace vs Emotient (FACET)

In my previous post, I compared the emotion recognition performance of OpenFace and FACET. The results suggested that OpenFace has the better face detection algorithm but slightly less accurate action unit detection.

In this update, I add Affectiva’s emotion recognition algorithm (AFFDEX) and look at how it compares to OpenFace and FACET.

Face Detection Success

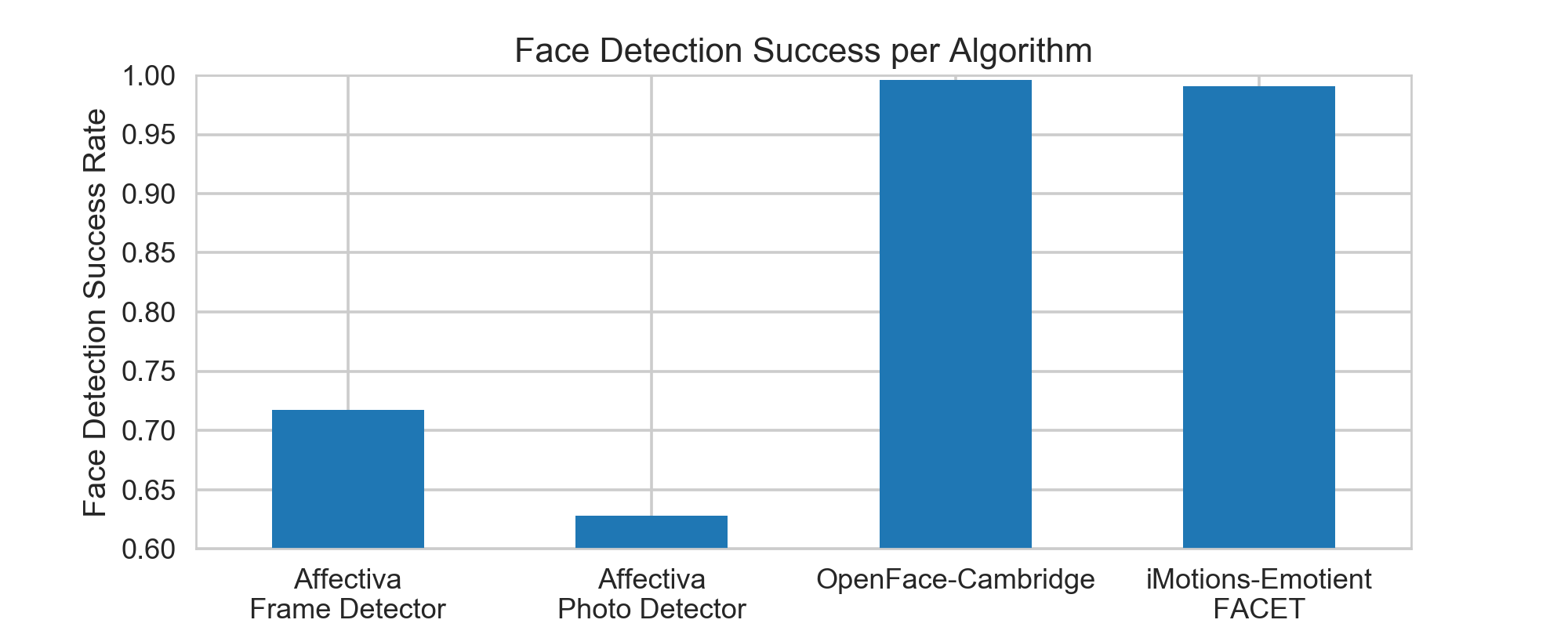

First, I compare the face detection success rates of the algorithms. As mentioned in my tutorial on using Affectiva’s JavaScript API, Affectiva offers a frame detector and a photo detector. I ran my sample video through both detectors at 1 Hz using Affectiva’s most recent API, Affdex 3.2. The plot shows the percentage of the video in which each algorithm successfully detected a face.

I was surprised to see that OpenFace and iMotions (FACET) both outperformed Affectiva in face detection. Affectiva’s success rate of 72% meant that emotion extraction through Affectiva provided 25% less data than through OpenFace or iMotions. Changing the parameters might improve Affectiva’s detection, but the maximum frame rate I could process, 20 Hz, seemed to produce worse results.
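
For readers who want to compute a comparable success rate on their own output, here is a minimal sketch. It assumes each tool's per-frame output has already been loaded into a list in which a missing detection is `None` or `NaN`; the function name `detection_rate` and the sample values are hypothetical and not part of any of the three tools.

```python
import math


def detection_rate(values):
    """Return the percentage of frames with a usable (non-missing) prediction."""
    if not values:
        return 0.0
    detected = sum(
        1
        for v in values
        if v is not None and not (isinstance(v, float) and math.isnan(v))
    )
    return 100.0 * detected / len(values)


# Hypothetical per-frame outputs; None or NaN marks a frame where no face was found.
frames = [0.2, None, 0.9, float("nan"), 0.4]
rate = detection_rate(frames)  # 3 of 5 frames have a detection
```

How each tool marks a failed detection differs, so the missing-value check would need adapting to the actual export format.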

Emotion Detection Comparison

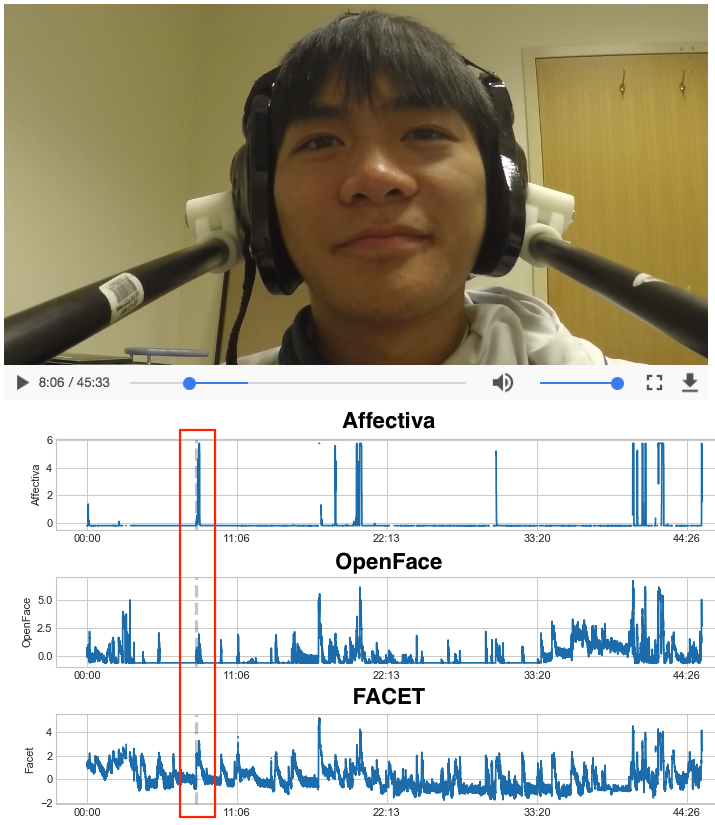

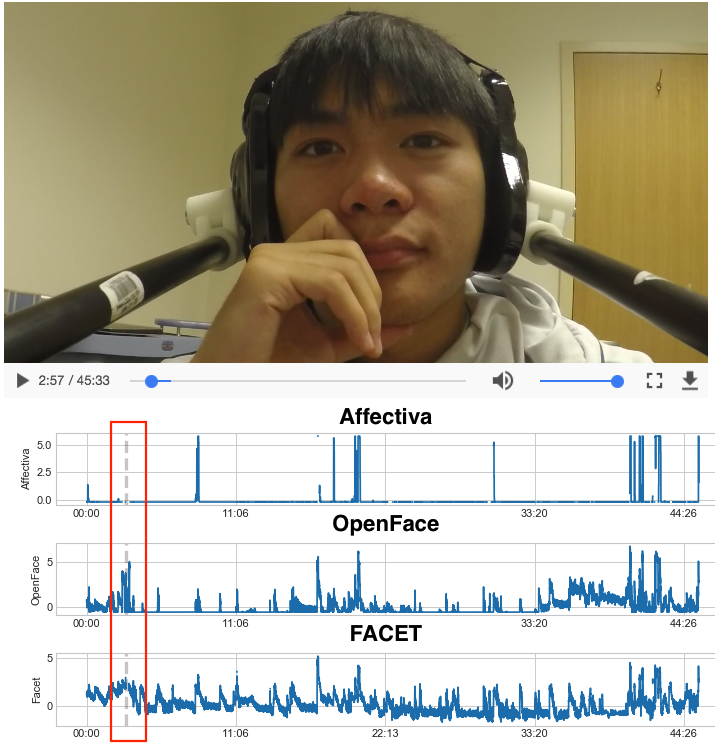

Each package provides slightly different emotion predictions, but smiling is a universal feature that can be compared across all three algorithms. From here on, I only use the results from Affectiva’s frame detector, as it gave the better result. The plot shows a simple time series of smile detection for each algorithm (units are standardized for comparison).

Affectiva clearly seems to miss many timepoints at which OpenFace or FACET predicts a smile. All values are standardized for plotting, but Affectiva’s output is noticeably less continuous than that of the other algorithms (Affectiva predictions are given on a 0-100 probability scale), suggesting that Affectiva might be missing the nuanced intensity differences of smiles.
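
Because the three tools report smiles on different scales, some standardization is needed before overlaying them. The sketch below shows one common choice, z-scoring each series; this is an assumption for illustration and not necessarily the exact transformation behind the plots. The function name `zscore` and the sample values are hypothetical, and missing frames are represented as `None`.

```python
from statistics import mean, pstdev


def zscore(series):
    """Standardize a series to mean 0 and SD 1, leaving missing frames as None."""
    present = [x for x in series if x is not None]
    if not present:
        return list(series)
    mu = mean(present)
    sigma = pstdev(present)
    return [
        None if x is None else ((x - mu) / sigma if sigma else 0.0)
        for x in series
    ]


# Hypothetical smile scores on a 0-100 scale, with one frame missing a detection.
affectiva_smile = [0, None, 50, 100]
standardized = zscore(affectiva_smile)
```

Z-scoring puts the series on a common scale, but it does not change their shape, so a series that jumps between 0 and 100 will still look less continuous than a graded intensity signal.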

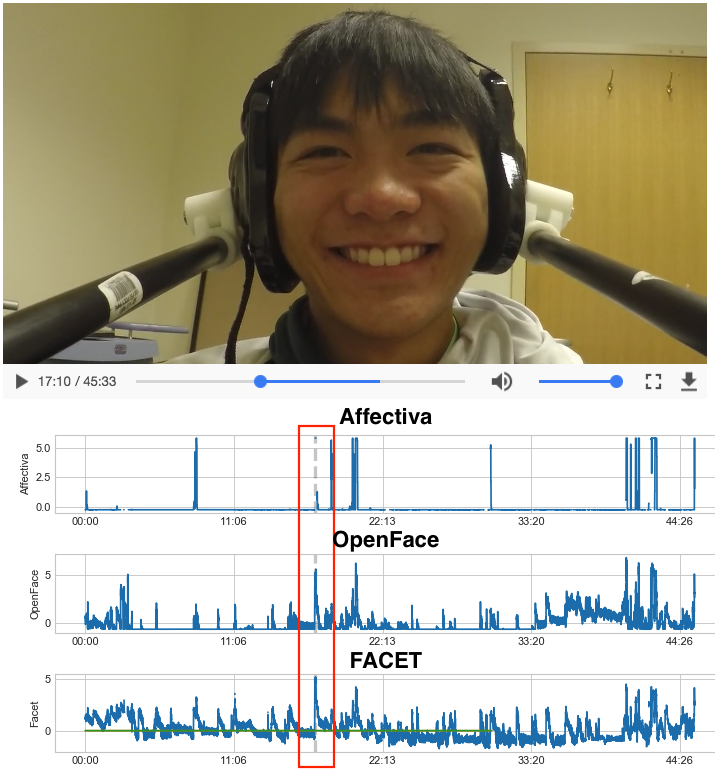

We can also zoom in on times where the predictions diverge between Affectiva and other algorithms.

In the figure above, the left plots show a case in which Affectiva detects a smile while OpenFace and FACET predict only a moderate smile. This contrasts with the plots on the right, which show a clearly full-blown smile that OpenFace and FACET both detect. Affectiva does seem to get it right, but for some reason it cannot continuously detect the smile in the frames surrounding that instance.

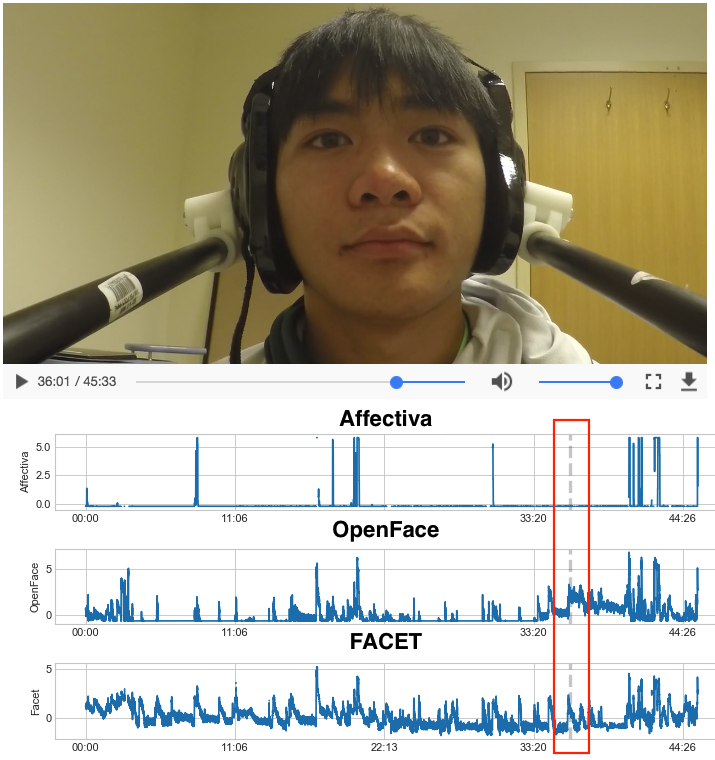

We can also look at times when Affectiva is missing detections.

In the left plot, Affectiva fails to detect a face and a smile while a moderate smile is detected by OpenFace and FACET. In the plot on the right, Affectiva once again misses a subtle smile that OpenFace and FACET detect.

See also this article in Behavior Research Methods, which compares Affectiva to FACET.
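
Both kinds of disagreement discussed above (diverging predictions and missed detections) can be located programmatically instead of by eye. This is a minimal sketch under a few assumptions: the two series are already standardized and aligned frame by frame, missing detections are `None`, and the threshold of 1.0 standard deviations is an arbitrary illustrative value. The function name `divergent_frames` is hypothetical.

```python
def divergent_frames(a, b, threshold=1.0):
    """Return indices where two aligned series disagree.

    A frame counts as divergent when exactly one series is missing a value,
    or when the two values differ by more than `threshold`.
    """
    indices = []
    for i, (x, y) in enumerate(zip(a, b)):
        if x is None and y is None:
            continue  # neither tool found a face, so there is nothing to compare
        if x is None or y is None or abs(x - y) > threshold:
            indices.append(i)
    return indices


# Hypothetical standardized smile scores for four frames.
affectiva = [0.1, None, 2.0, -0.5]
openface = [0.0, 0.8, 0.2, -0.4]
flagged = divergent_frames(affectiva, openface)
```

The flagged indices can then be mapped back to video timestamps to pull out the frames worth inspecting.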

Conclusion

Overall, it seems clear that Affectiva’s JavaScript API does not perform as well as OpenFace or FACET in emotion and facial expression detection. This is surprising given that Affectiva is known to have been trained on much more data, and on more naturalistic data, than OpenFace or FACET. However, one possibility is that the way I use their API, as outlined in my tutorial, is not the optimal way to use their algorithm.

If you are interested in validation of the algorithms, check out this paper by Stöckli and colleagues (2017) in Behavior Research Methods. They also find that the FACET engine outperforms Affectiva’s AFFDEX.
