Affectiva JavaScript Emotion SDK Tutorial

Affectiva is one of the leaders in emotion recognition technology, and it generously provides a version of its emotion recognition software (Affdex) in the form of Emotion SDKs. This means that people can use its patented technology to develop new apps and websites. Affectiva also provides sample apps built on the JavaScript SDK, such as ones that analyze a photo or a camera stream.

Researchers can also use the SDK to extract emotion predictions from videos recorded in lab settings for further analysis. This tutorial is designed to help those with a similar purpose.

To do this, I wrote a short HTML file that calls the Affectiva JavaScript Emotion SDK to extract emotion information from a video stored locally on my computer. When this HTML file is opened in a browser, the app automatically extracts emotion features from the video and saves the results to my computer.

Step 1

Download and unzip the current version of the affectiva-api-app by clicking here or clone the repository.

git clone https://github.com/cosanlab/affectiva-api-app.git

Step 2

Move the video file you would like to process into the affectiva-api-app/data folder.

If you downloaded the zip file, your path would most likely be Downloads/affectiva-api-app-master/data

For simplicity, let’s say my video file is called sample_vid.mp4, which is already in the data folder.

Step 3

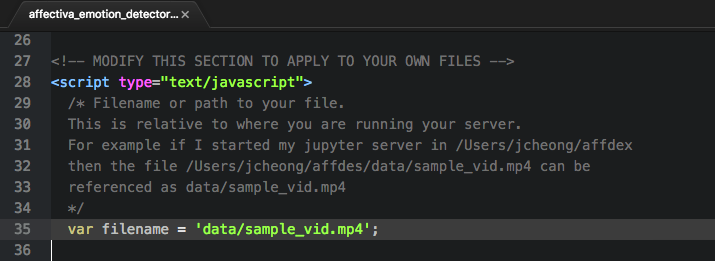

Open affectiva_emotion_detector.html in your favorite text editor (e.g., Atom, Sublime).

Scroll down to line 35, and change filename to the name of your video.

To process the sample video, we would leave it as data/sample_vid.mp4.

Step 4

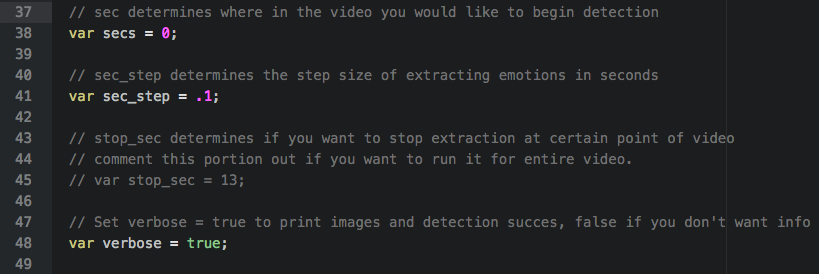

Modify additional parameters.

Below the filename, you can also adjust the parameters of the app, such as where in the video you want to start and stop processing and how fine-grained you want the processing to be.

In this example, we start processing at time 0 (in seconds) and increment by 100 ms (sec_step) until the end of the video.
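To make the sampling concrete, here is a small Python sketch of the timepoints these settings would request. Only sec_step is a name from the app; the sample_times function, its sec_start and sec_end arguments, and the 15-second duration are hypothetical and chosen purely for illustration. Whether the app includes the final timepoint is also an assumption.

```python
import math


def sample_times(sec_start, sec_end, sec_step):
    """Return evenly spaced timepoints (in seconds) from sec_start to sec_end."""
    # Count whole steps first so floating-point error does not accumulate;
    # the small epsilon guards against results like 2.9999999999999996.
    n_steps = math.floor((sec_end - sec_start) / sec_step + 1e-9)
    return [round(sec_start + i * sec_step, 3) for i in range(n_steps + 1)]


# Hypothetical 15-second video sampled every 100 ms.
times = sample_times(0, 15, 0.1)
print(len(times), times[:3], times[-1])
```

A smaller sec_step gives a denser time series but means more frames for the SDK to process.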

Step 5

To run this HTML app, you need to start a web server on your computer.

On Macs with Python installed, this is a simple process.

Open Terminal and navigate to the ‘affectiva-api-app’ folder.

If the folder is in your Downloads folder, you can type the following in Terminal (if you downloaded the zip file, the folder is most likely named affectiva-api-app-master).

cd

cd Downloads/affectiva-api-app

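If you already have Python 2 installed, you can start the web server with the command below. On Python 3, the SimpleHTTPServer module was merged into http.server, so the equivalent command is python3 -m http.server 8000.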

python -m SimpleHTTPServer 8000

If this command replies with Serving HTTP on 0.0.0.0 port 8000 ... then you are good to go.

If this part is not working, you may need to install Python on your system (I recommend installing it through Anaconda).
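If you would rather start the server from a Python 3 script, here is a minimal sketch that uses only the standard library (Python 3.7 or later). The start_server helper is a hypothetical name and is not part of the app. The self-check at the bottom uses port 0 so the operating system picks a free port, and then shuts the server down again.

```python
import functools
import http.server
import threading
import urllib.request


def start_server(directory, port=8000):
    """Serve `directory` over HTTP on 127.0.0.1 in a background thread."""
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=directory
    )
    server = http.server.ThreadingHTTPServer(("127.0.0.1", port), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


# Self-check: serve the current folder on a free port and request the root page.
server = start_server(".", port=0)
url = "http://127.0.0.1:%d/" % server.server_address[1]
# Bypass any system proxy settings for this local request.
opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
with opener.open(url) as response:
    status = response.status
server.shutdown()
server.server_close()
print(url, status)
```

For the tutorial itself, you would call start_server with the path to the affectiva-api-app folder and port 8000, and keep the script running while the browser processes the video.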

Step 6

Open your favorite web browser (e.g., Chrome, Firefox) and type the following into the address bar:

http://localhost:8000/

This should now give you a list of the files in that directory.

Step 7

Now click on affectiva_emotion_detector.html. It will begin the extraction and automatically download the results as a JSON file, as shown in the image below.

Step 8

Load the emotion predictions and play around!

import pandas as pd

df = pd.read_json('~/Downloads/data_sample_vid.json',lines=True)
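The lines=True argument tells pandas to treat each line of the file as a separate JSON record. Here is a minimal sketch of that layout using only the standard library, assuming the output file is laid out the way lines=True expects. The two records and their values are invented for illustration; only the field names are borrowed from the app's output.

```python
import io
import json

# Hypothetical two-frame excerpt in one-record-per-line layout (values invented).
sample = io.StringIO(
    '{"Timestamp": 0.0, "joy": 0.01, "attention": 98.2}\n'
    '{"Timestamp": 0.1, "joy": 0.02, "attention": 97.9}\n'
)

# Parse one JSON object per non-empty line.
records = [json.loads(line) for line in sample if line.strip()]
print(len(records), sorted(records[0]))
```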

Analysis

One VERY important thing to keep in mind is that the output does not save data when face detection fails, so those timepoints are simply absent from the file. Therefore, I highly recommend reindexing the data onto a regular time grid to make sure you know which timepoints have missing data.

This is easy to do in pandas with the following:

import pandas as pd

import numpy as np

df = pd.read_json('~/Downloads/data_sample_vid.json',lines=True)

df.index= df.Timestamp.astype(float)

newindex = np.arange(0,df.Timestamp.max(),.1)

df = df.reindex(newindex,method='nearest',tolerance=.0001)

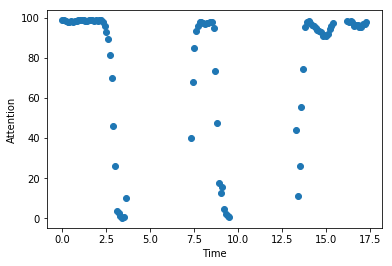

In the sample video, I rotated my head left and then right, so there is definitely missing data at those times because face detection often fails on profile views. This is apparent when I plot one of the predictions, such as attention, which shows missing data around the 5th and 11th seconds, when my head is rotated.
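Beyond eyeballing a plot, the missing stretches can be listed directly. Below is a minimal sketch on synthetic data rather than the real output: the attention values, the 2-second duration, and the gap positions are all made up, and the variable names are hypothetical. It assumes a frame that has already been reindexed onto a regular 0.1-second grid, so failed detections show up as NaN.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for one reindexed column: 0.0 to 1.9 s in 0.1 s steps.
index = np.round(np.arange(0, 2.0, 0.1), 1)
attention = pd.Series(95.0, index=index)
attention.iloc[5:8] = np.nan   # pretend detection failed from 0.5 s to 0.7 s
attention.iloc[15] = np.nan    # and again at 1.5 s

missing = attention.isna()
# Give every run of consecutive equal values its own id, then keep missing runs.
run_id = missing.astype(int).diff().ne(0).cumsum()
gaps = [
    (float(run.index[0]), float(run.index[-1]))
    for _, run in attention[missing].groupby(run_id[missing])
]
print(gaps)
```

Each tuple holds the first and last missing timepoint of one gap, which makes it easy to decide whether a gap is short enough to interpolate or should be excluded from analysis.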

Here is the full list of features that the app currently outputs:

Timestamp

anger

attention

browFurrow

browRaise

cheekRaise

chinRaise

contempt

dimpler

disgust

engagement

eyeClosure

eyeWiden

fear

innerBrowRaise

jawDrop

joy

lidTighten

lipCornerDepressor

lipPress

lipPucker

lipStretch

lipSuck

mouthOpen

noseWrinkle

sadness

smile

smirk

surprise

upperLipRaise

valence

Conclusion

Obviously, this app can be improved with an option for batch processing and a GUI.

Please let me know if you would like to help make the app more user friendly!
